Soft skills for technologists

What 4 straight days of using ChatGPT as a coding teacher taught me

You can insult a chatbot as much as you want.

I have.

And so far, nothing bad happens.

But nothing good happens either.

This is something striking I've noticed whenever I've tried to use ChatGPT for extended, multi-day, complex tasks (like building a little SaaS product).

Is the chatbot stoic? Has it been trained on the triad of wise bearded men from the past?

Does it (him, her?) care when I unfurl heinous insults in ALL CAPS when it confusedly suggests I write another piece of janky code?

To be honest, I think this is an interesting question, though not because of its immediate consequences (being preemptively nice to robots is a kind of Pascal's wager: being pious just in case God is real).

The fact that you cannot piss off a chatbot (bear with me, those of you who will say that yes, you can) interests me for what it tells us about dealing with frustration.

As an evolved ape, my tools for dealing with frustration are tied to my social sophistication (scream or sulk), my opposable thumbs (punch the table) and my upright locomotion (go on walks around the neighborhood to cool off).

ChatGPT has none of these tools and merely apologizes: “I'm sorry for my mistake, you are right, here is the corrected code,” or some variation of it. Because it is impossible to make him (it, her?) respond with aggression, it is completely useless for drumming up drama.

At times, when I'm extremely irritated at some minor error that keeps happening, I find myself genuinely wishing I could make ChatGPT feel like a moron. It's embarrassing to admit, but it has really happened, multiple times.

What is so disconcerting to witness in such a laconic chatbot is that its dryness works. She (he, it?) messes up one time too many, I get really annoyed and curse, but I quickly see how futile my rage is. Things are as they are. The smart computer made a mistake and asked for forgiveness (a theme for another text, no doubt), I breathe a little deeper for a while, and we are back at it.

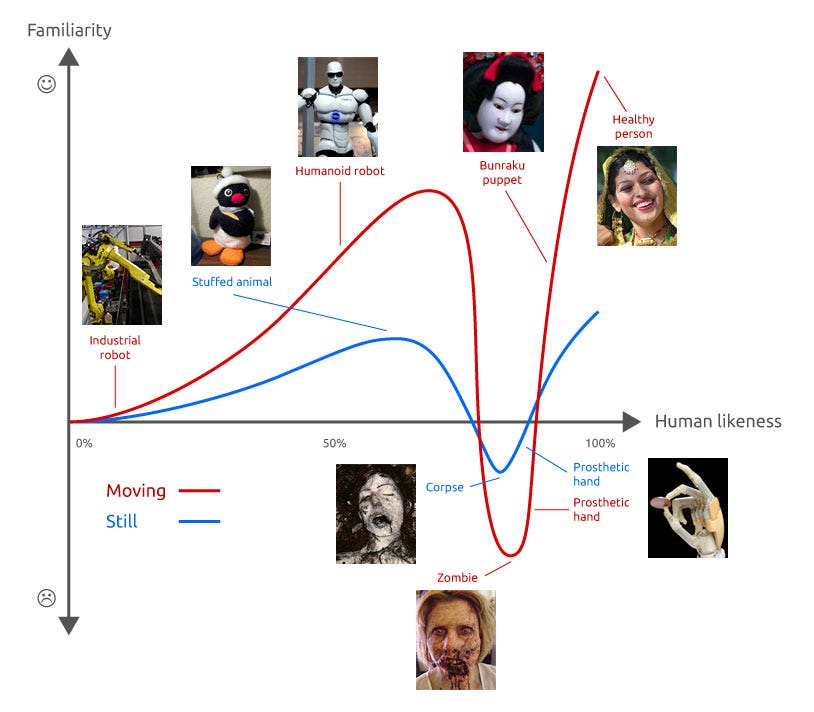

In robotics, there's the notion of the uncanny valley, which I think is useful here:

A robot that looks nothing like a person does not trigger the same weirded-out response as a robot that almost looks like a person. There's a zone where robots are creepy, not because they are different, but because they are slightly off.

I've felt a bit like this about ChatGPT and its unwavering, loving grace.

It talks like it wants to help me, but I know it doesn't. It tells me it is sorry, but that cannot be true. It displays patience toward me, but nobody buys it. It's just weird.

"Weird" the way someone you can't read or understand is weird. They resist classification; you can't tell whether they are friend or foe.

But it is oh so useful. ChatGPT has been immensely useful. It's the best prosthetic I've ever used (glasses, orthopedic shoes, braces and even a lumbar support thingy for a second or two: I've used them all).

Faustian deals make total sense now. Their pull is clear: we are drawn to promises of harder, better, faster, stronger (a Daft Punk song that perfectly encapsulates both what technology wants and what humans want).

In the past week or so, I've spent hour upon hour talking to ChatGPT (paid version), explaining what I want to make (a little SaaS with an HTML+JS+CSS front end, a MySQL database and a Python back end) and putting it together piece by piece. I do not know how to code. Yet just by talking to ChatGPT in a very specific way, I moved infinitely faster than I could have without it.

Some things worked better than others:

Explain what you want to achieve in clear terms. If you don't know exactly how to explain it, ask ChatGPT to help you understand the general way you could go about it (“I want to create a webpage where users can ask questions and have them answered by AI. How can I do that?”).

Ask for numbered responses if possible. This helps a lot with going over instructions and digging deeper into specific topics (“please expand on point 2, I don't know what to do”).

Establish rules of engagement (“Consider that you are an empathetic senior developer explaining code to a junior developer. Your explanations are detailed and focus on one topic at a time. If something is too long, you break it down.”).

Sometimes, the fact that ChatGPT is basically a probabilistic model of which words follow other words really shows. For instance, it might show me code to fix my script but mess up the variable names, because it was trained on generic names (e.g. “i”) rather than the ones I'm using in my code. In these cases, you need to go all Socratic on it:

- “Friend, what is the name of the variable in my code?” I ask.

- “Variable X,” says ChatGPT.

- “And what is the name of the variable you gave me?”

- “I've called it ‘i’.”

And then I ask: “You know what to do, right?”

- “Yes, I'll rename ‘i’ to ‘variable X’,” responds ChatGPT, before showing me the corrected code.

Moving slowly. Piece by piece. Ask for help creating a little function. Then add to it. Test it. Try to add a little more, and test it again.
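To make the “piece by piece” rhythm concrete, here is a minimal sketch in Python, the language of the back end described above. The task (cleaning up a user's question before it is sent anywhere) and the names `clean_question`, `prepare_question` and `max_length` are hypothetical, invented only for illustration. The point is the loop: write a tiny function, test it, add one small step, test again. It also keeps one consistent variable name (`question`) throughout, which is exactly what to check whenever ChatGPT hands back code with a generic name like `i`.

```python
# Hypothetical example: one tiny piece of a Q&A SaaS back end, built in small steps.

# Step 1: a very small function that does exactly one thing.
def clean_question(question: str) -> str:
    """Trim surrounding whitespace from a user's question."""
    return question.strip()

# Test step 1 before adding anything else.
assert clean_question("  How do I log in?  ") == "How do I log in?"

# Step 2: add a little more on top of step 1, keeping the same
# variable name ("question") instead of a generic one like "i".
def prepare_question(question: str, max_length: int = 200) -> str:
    """Clean the question, reject empty input and cap its length."""
    question = clean_question(question)
    if not question:
        raise ValueError("question must not be empty")
    return question[:max_length]

# Test step 2, including the new edge cases it introduced.
assert prepare_question("  What is SaaS?  ") == "What is SaaS?"
assert len(prepare_question("x" * 500)) == 200
try:
    prepare_question("   ")
except ValueError:
    pass  # empty input is rejected, as intended
else:
    raise AssertionError("an empty question should be rejected")

print("all small tests passed")
```

Each step is small enough that, when a test fails, you know exactly which few lines to ask ChatGPT about.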

What does not work:

Going at it full clip from the start (“I want to create a SaaS company that has a login page, a registration page, a core system that manages everything and microservices that do X, Y and Z.”).

ChatGPT is smart, but it has limitations: it really struggles with multiple files at once (getting it to keep track of variables and endpoints across different modules is genuinely hard).

Just copying and pasting whatever it outputs. ChatGPT is not perfect, but it will always come up with an answer, so it can mess up. It may well show you code that looks right, and could even work as a standalone piece, but simply doesn't work with your project.

If you don't try to understand what it gives you and just paste it into your editor, you'll end up with some massive spaghetti code.

Swearing, cursing or insulting ChatGPT. It understands that you're angry but will not “grow into the challenge” to serve you. It knows what it can do, and you cannot pressure it to go further.

Actually, this is the most moving thing for me. It embodies something we hear a lot about in modern software development: the focus on people, on learning what they can and cannot do, and on the uselessness of stressing people past what they can deliver.

Throughout history, artificial intelligence has been hailed as just around the corner many times. It has been pursued through diverse strategies, and the current golden age stems from the advances triggered by cognitive computing and neural networks.

In short, ideas borrowed from the human brain are what unleashed the recent jump in capability. It isn't all that surprising, then, that what works for balanced, self-aware people turns out to work for machines too.